Implementing Adaptive HTTP Streaming with Web Technology

2017. 9. 18.

An enormous amount of video content is consumed not only in Korea but around the world. The dominant web video technology, once Flash, has gradually given way to standard HTML5 video, and most video services today are built on it. As video technology has advanced, various methods have been explored for streaming at the best quality the network allows, without buffering interruptions. One such method is Adaptive HTTP Streaming, which uses the existing HTTP protocol rather than creating a new one.

What is Adaptive HTTP Streaming?

Adaptive HTTP Streaming is, as the name suggests, streaming that adapts (or responds) to the user's network conditions. Similar technologies such as RTSP and RTMP streaming exist, but because they do not use HTTP they require additional cost and effort. Before adaptive streaming, video was commonly delivered by Progressive Download (PD): once a video source is selected, that single file is downloaded and played sequentially. HTML5 video supports PD by default.

Image Source: https://www.jwplayer.com/blog/what-is-video-streaming/

The drawback of PD is that it selects and downloads a video source at a single resolution, which leads to buffering under poor network conditions, and the buffering persists until the network improves. Adaptive Streaming was created to solve this problem. The idea is to encode the video content at several resolutions and store each one as small segments rather than as one large file. When a user plays the video, the system selects the appropriate source based on network conditions to provide the best quality the connection can sustain. Because the content is encoded at multiple resolutions and divided into small segments, the quality can be switched at any segment boundary. For example, if the user's network condition is poor, 480p segments are streamed; as conditions improve, higher-resolution segments are chosen.

Image Source: https://www.jwplayer.com/blog/what-is-video-streaming/

You may have watched a Netflix or YouTube video whose resolution starts low and gradually improves. Low-resolution content is delivered first because the network condition is not yet known; once it has been measured, higher-resolution content is streamed accordingly. Adaptive streaming not only helps manage server traffic but also reduces data usage for users while keeping playback free of buffering. For users who would rather tolerate buffering in exchange for a higher resolution, a UI option can be provided.

Flow of Adaptive Streaming

Server Side

To implement adaptive streaming, more preparation is required compared to PD. Instead of a single video file, videos need to be encoded into multiple resolutions and segmented into smaller files. Additionally, information about these segmented files must be provided to clients.

- Split uploaded videos into small segments.

- Encode these segments at resolutions matched to various bandwidths, so that each resolution has its own set of segments.

- Provide clients with a manifest file containing information about media segments for each resolution.

Videos are split into small segments with tools specific to each codec. The document that describes the segmented files (the manifest) is written in a format such as Apple-HLS or MPEG-DASH.
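As a rough illustration of the information the server has to publish, the sketch below builds a format-agnostic description of every segment for every resolution. The ladder values, the `describeSegments` helper, the `cdn.example.com` URL layout, and the `.m4s` extension are all assumptions made for this example; a real service would emit an HLS or DASH manifest instead.

```javascript
// Hypothetical bitrate ladder; the numbers are illustrative only.
const LADDER = [
  { name: '480p', width: 854, height: 480, bandwidth: 1200000 },
  { name: '720p', width: 1280, height: 720, bandwidth: 3200000 },
  { name: '1080p', width: 1920, height: 1080, bandwidth: 6800000 },
];

// Describe every segment of every rendition: URL, start time, duration.
function describeSegments(durationSec, segmentSec, ladder, baseUrl) {
  const count = Math.ceil(durationSec / segmentSec);
  return ladder.map((rendition) => ({
    ...rendition,
    segments: Array.from({ length: count }, (_, i) => ({
      url: `${baseUrl}/${rendition.name}/seg-${i}.m4s`,
      start: i * segmentSec,
      // The last segment may be shorter than segmentSec.
      duration: Math.min(segmentSec, durationSec - i * segmentSec),
    })),
  }));
}

// A 95-second video cut into 10-second segments.
const manifest = describeSegments(95, 10, LADDER, 'https://cdn.example.com/video42');
console.log(manifest[0].segments.length); // 10
```

Each resolution ends up with the same number of segments covering the same time ranges, which is what lets a player switch quality at a segment boundary.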

Client Side

On the web client side, Media Source Extensions (MSE) enable adaptive streaming by delivering streaming data to players.

1. The client requests a manifest file containing segment information for each resolution from the server.

2. The manifest file is parsed to retrieve necessary information such as video details, available quality levels, and segment URLs (e.g., CDN URLs).

3. The client measures the user's network bandwidth and selects the optimal video quality based on manifest contents before downloading required segments (bandwidth measurement continues during downloads).

4. The downloaded segment data is fed into the MSE buffer.

5. MSE decodes the data and provides it to the video object for playback (go to step 3).
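The bandwidth measurement and quality selection in the flow above can be sketched as two small functions. This is a minimal sketch, not how any particular player does it: `measureBps`, `selectRendition`, and the 0.8 safety factor are assumptions made for illustration.

```javascript
// Throughput of one finished download, in bits per second.
function measureBps(byteLength, elapsedMs) {
  return (byteLength * 8) / (elapsedMs / 1000);
}

// Pick the highest-bandwidth rendition that fits within a fraction
// (safetyFactor) of the measured throughput. Falls back to the lowest
// rendition when nothing fits.
function selectRendition(renditions, measuredBps, safetyFactor = 0.8) {
  const sorted = [...renditions].sort((a, b) => a.bandwidth - b.bandwidth);
  const budget = measuredBps * safetyFactor;
  let chosen = sorted[0];
  for (const rendition of sorted) {
    if (rendition.bandwidth <= budget) chosen = rendition;
  }
  return chosen;
}

// Usage with two hypothetical quality levels.
const levels = [
  { name: 'lo', bandwidth: 200000 },
  { name: 'md', bandwidth: 500000 },
];
const throughput = measureBps(125000, 1000); // 125 kB in 1 s
console.log(selectRendition(levels, throughput).name); // 'md'
```

Running the selection again after each downloaded segment is what makes the stream adapt while it plays.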

Apple-HLS vs MPEG-DASH

The two main manifest formats for adaptive HTTP streaming are Apple's proprietary HLS and MPEG's standardized DASH. These formats act as protocols defining specifications for manifest files.

Apple-HLS

Developed by Apple, HLS is natively supported by Safari on both macOS and iOS. On browsers with native support, which also include Microsoft Edge and some Android browsers, HTML5 video can play an HLS manifest directly without additional setup. However, relying on a native HLS implementation limits how much control a service has over its streaming strategy.

Key features:

- Developed by Apple.

- Natively supported by Safari and specific browsers for HTML5 Video.

- Mobile Safari does not support MSE; only HLS can be used.

- Media container formats: MPEG-2 TS and fragmented MP4 (since 2016).

- Uses m3u8 playlists as manifests.

    #EXTM3U
    #EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=200000,RESOLUTION=720x480
    http://ALPHA.mycompany.com/lo/prog_index.m3u8
    #EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=200000,RESOLUTION=720x480
    http://BETA.mycompany.com/lo/prog_index.m3u8
    #EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=1920x1080
    http://ALPHA.mycompany.com/md/prog_index.m3u8
    #EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=1920x1080
    http://BETA.mycompany.com/md/prog_index.m3u8
    ....truncated....
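To show what a client has to pull out of a playlist like this one, here is a minimal sketch of a parser for the `#EXT-X-STREAM-INF` entries. The function names are made up for this example, and the parser handles only the tags shown above; it is not a complete HLS implementation.

```javascript
// Parse an attribute list such as BANDWIDTH=200000,RESOLUTION=720x480.
// Quoted values (which may contain commas) are supported.
function parseAttributes(text) {
  const attrs = {};
  const re = /([A-Z0-9-]+)=("[^"]*"|[^,]*)/g;
  let match;
  while ((match = re.exec(text)) !== null) {
    attrs[match[1]] = match[2].replace(/^"|"$/g, '');
  }
  return attrs;
}

// Return one entry per variant stream: bandwidth, resolution, playlist URI.
function parseMasterPlaylist(text) {
  const lines = text.split(/\r?\n/).map((line) => line.trim()).filter(Boolean);
  if (lines[0] !== '#EXTM3U') throw new Error('Not an M3U8 playlist');
  const tag = '#EXT-X-STREAM-INF:';
  const variants = [];
  for (let i = 1; i < lines.length; i++) {
    if (!lines[i].startsWith(tag)) continue;
    const attrs = parseAttributes(lines[i].slice(tag.length));
    const uri = lines[i + 1]; // the URI follows its tag line
    if (!uri || uri.startsWith('#')) continue;
    const [width, height] = attrs.RESOLUTION
      ? attrs.RESOLUTION.split('x').map(Number)
      : [undefined, undefined];
    variants.push({ bandwidth: Number(attrs.BANDWIDTH), width, height, uri });
  }
  return variants;
}

const sample = [
  '#EXTM3U',
  '#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=200000,RESOLUTION=720x480',
  'http://ALPHA.mycompany.com/lo/prog_index.m3u8',
  '#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=500000,RESOLUTION=1920x1080',
  'http://ALPHA.mycompany.com/md/prog_index.m3u8',
].join('\n');
const variants = parseMasterPlaylist(sample);
console.log(variants.map((v) => v.bandwidth)); // [ 200000, 500000 ]
```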

MPEG-DASH

DASH is an open standard approved by MPEG and ISO. Unlike the vendor-driven HLS, it has no native browser support, but it offers greater flexibility in media containers and richer expressiveness through its XML-based manifest.

Key features:

- Approved as a standard by MPEG and ISO.

- Requires MSE for adaptive streaming implementation.

- No restrictions on media container formats.

- Facilitates seamless ad insertion (via Period structure).

- XML-based manifests allow comprehensive information delivery (e.g., DRM).

    <?xml version="1.0"?>
    <MPD xmlns="urn:mpeg:dash:schema:mpd:2011" profiles="urn:mpeg:dash:profile:full:2011" minBufferTime="PT1.5S">
      <!-- Ad -->
      <Period duration="PT30S">
        <BaseURL>ad/</BaseURL>
        <!-- Everything in one Adaptation Set -->
        <AdaptationSet mimeType="video/mp2t">
          <!-- 720p Representation at 3.2 Mbps -->
          <Representation id="720p" bandwidth="3200000" width="1280" height="720">
            <!-- Just use one segment since the ad is only 30 seconds long -->
            <BaseURL>720p.ts</BaseURL>
            <SegmentBase>
              <RepresentationIndex sourceURL="720p.sidx"/>
            </SegmentBase>
          </Representation>
          <!-- 1080p Representation at 6.8 Mbps -->
          <Representation id="1080p" bandwidth="6800000" width="1920" height="1080">
            <BaseURL>1080p.ts</BaseURL>
            <SegmentBase>
              <RepresentationIndex sourceURL="1080p.sidx"/>
            </SegmentBase>
          </Representation>
    ....truncated....
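Time values in the MPD, such as `duration="PT30S"` and `minBufferTime="PT1.5S"`, are written as ISO 8601 durations, so a client has to convert them to seconds before it can use them. The sketch below is one way to do that; `parseDuration` is a name chosen for this example, and it deliberately supports only days, hours, minutes, and seconds (not years or months).

```javascript
// Convert an ISO 8601 duration such as "PT1.5S" or "PT1H2M3S" to seconds.
// Only the D, H, M and S designators are supported in this sketch.
function parseDuration(value) {
  const num = '(\\d+(?:\\.\\d+)?)';
  const re = new RegExp(
    `^P(?:${num}D)?(?:T(?:${num}H)?(?:${num}M)?(?:${num}S)?)?$`
  );
  const match = re.exec(value);
  // Reject strings with no components at all ("P", "PT", "P1DT").
  if (!match || value === 'P' || value.endsWith('T')) {
    throw new Error(`Unsupported duration: ${value}`);
  }
  const [, days = 0, hours = 0, minutes = 0, seconds = 0] = match;
  return (
    Number(days) * 86400 +
    Number(hours) * 3600 +
    Number(minutes) * 60 +
    Number(seconds)
  );
}

console.log(parseDuration('PT30S'));  // 30
console.log(parseDuration('PT1.5S')); // 1.5
```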

MSE (Media Source Extensions)

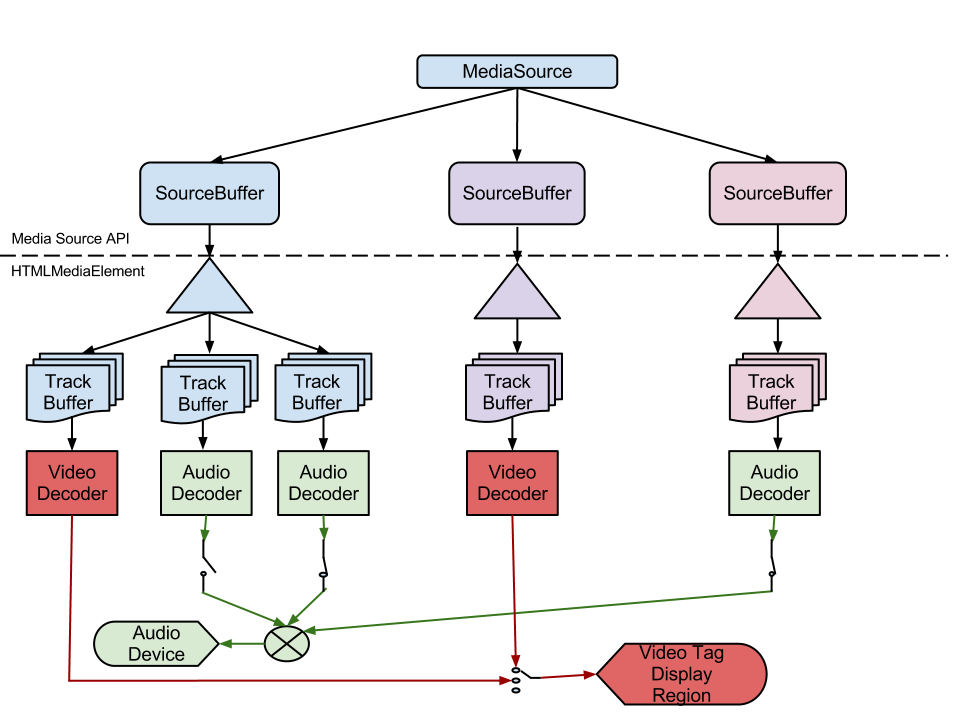

MSE lets JavaScript build the media stream for an HTMLMediaElement, taking the place of the source tags traditionally used in HTML5 video playback. Developers can fetch video data over HTTP and pass it directly to SourceBuffer objects for playback.
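Because MSE is not available everywhere (Mobile Safari, as noted earlier, offers only native HLS), a player usually checks what the browser supports before choosing how to play. The following is a minimal sketch of such a check. `detectEnvironment` and `choosePlaybackPath` are hypothetical helpers, and the order of preference is an assumption rather than a recommendation; `MediaSource.isTypeSupported` and `canPlayType` are the standard browser calls.

```javascript
// Probe the current environment. Outside a browser both flags are false.
function detectEnvironment(mimeCodec) {
  const video =
    typeof document !== 'undefined' ? document.createElement('video') : null;
  return {
    mseSupported:
      typeof MediaSource !== 'undefined' &&
      MediaSource.isTypeSupported(mimeCodec),
    // canPlayType returns '', 'maybe' or 'probably'.
    nativeHls: Boolean(
      video && video.canPlayType('application/vnd.apple.mpegurl')
    ),
  };
}

// Decide how to play: MSE first (full control over quality switching),
// then native HLS, then plain progressive download.
function choosePlaybackPath(env) {
  if (env.mseSupported) return 'mse';
  if (env.nativeHls) return 'native-hls';
  return 'progressive';
}

const env = detectEnvironment('video/webm; codecs="vorbis,vp8"');
console.log(choosePlaybackPath(env));
```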

Segments

Segments are the small chunks of encoded video data listed in a manifest such as DASH or HLS. There are two kinds:

- Initialization Segment contains metadata needed for decoding media sequences (e.g., codec data).

- Media Segment contains actual video data with timestamps indicating its position on the media timeline.
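The initialization segment has to reach the buffer before any media segment, and a SourceBuffer accepts only one append at a time: calling `appendBuffer` while a previous append is still in progress throws an error. The sketch below queues appends by waiting for the `updateend` event between them. `appendOne` and `appendInOrder` are names invented for this example.

```javascript
// Append one chunk and resolve when the SourceBuffer reports 'updateend'.
function appendOne(sourceBuffer, chunk) {
  return new Promise((resolve, reject) => {
    const cleanup = () => {
      sourceBuffer.removeEventListener('updateend', onEnd);
      sourceBuffer.removeEventListener('error', onError);
    };
    const onEnd = () => {
      cleanup();
      resolve();
    };
    const onError = () => {
      cleanup();
      reject(new Error('SourceBuffer append failed'));
    };
    sourceBuffer.addEventListener('updateend', onEnd);
    sourceBuffer.addEventListener('error', onError);
    sourceBuffer.appendBuffer(chunk);
  });
}

// Append chunks strictly one after another, in the order given.
// The caller is expected to pass the initialization segment first.
function appendInOrder(sourceBuffer, chunks) {
  return chunks.reduce(
    (previous, chunk) => previous.then(() => appendOne(sourceBuffer, chunk)),
    Promise.resolve()
  );
}
```

In a browser this could be called as `appendInOrder(sourceBuffer, [initSegment, mediaSegment1, mediaSegment2])`, where each argument is an ArrayBuffer fetched over HTTP.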

MediaSource Object & SourceBuffer Object

The MediaSource object represents the media source used by an HTMLMediaElement. Each SourceBuffer receives the media segments appended by the application and passes them to the MediaSource, which in turn supplies them to the player during playback.

Image Source: https://www.w3.org/TR/media-source/

Integrating HTML5 Video with MSE

The following example connects an HTML5 video element to MSE and appends one downloaded segment:

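    <video id="v" autoplay></video>
    <script>
      var video = document.getElementById('v');
      var mediaSource = new MediaSource();

      // 'sourceopen' fires once the MediaSource is attached to the video element.
      mediaSource.addEventListener('sourceopen', onSourceOpen.bind(this, video));

      // Attach the MediaSource to the video element through an object URL.
      video.src = window.URL.createObjectURL(mediaSource);

      function onSourceOpen(videoTag) {
        // The MIME type and codecs must match the segments that will be appended.
        var sourceBuffer = mediaSource.addSourceBuffer('video/webm; codecs="vorbis,vp8"');

        // Download one segment as binary data ('segment_url' is a placeholder).
        var xhr = new XMLHttpRequest();
        xhr.open('get', 'segment_url');
        xhr.responseType = 'arraybuffer';
        xhr.onload = function () {
          // Only append the response when the download succeeded.
          if (xhr.status !== 200) return;
          sourceBuffer.appendBuffer(xhr.response);
        };
        xhr.send();
      }
    </script>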

A full implementation also needs bandwidth estimation (for example, an algorithm based on EWMA control charts) and, for protected content, DRM handling via Encrypted Media Extensions (EME). For these topics, refer to the official specifications or more advanced guides.
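To give a feel for the bandwidth estimation mentioned above, here is a minimal sketch of a plain exponentially weighted moving average (EWMA) over download samples. It shows only the smoothing step, not a full control chart, and the class name and the default `alpha` of 0.3 are assumptions made for this example.

```javascript
// Smooths per-segment throughput samples so that one unusually fast or
// slow download does not cause an immediate quality switch.
class EwmaBandwidthEstimator {
  constructor(alpha = 0.3) {
    if (!(alpha > 0 && alpha <= 1)) {
      throw new RangeError('alpha must be in the range (0, 1]');
    }
    this.alpha = alpha;       // weight given to the newest sample
    this.estimateBps = null;  // no estimate until the first sample
  }

  // Record one finished download and return the updated estimate.
  addSample(bytes, elapsedMs) {
    if (elapsedMs <= 0) return this.estimateBps;
    const sampleBps = (bytes * 8 * 1000) / elapsedMs;
    this.estimateBps =
      this.estimateBps === null
        ? sampleBps
        : this.alpha * sampleBps + (1 - this.alpha) * this.estimateBps;
    return this.estimateBps;
  }
}

const estimator = new EwmaBandwidthEstimator(0.5);
console.log(estimator.addSample(125000, 1000)); // 1000000
console.log(estimator.addSample(250000, 1000)); // 1500000
```

A larger `alpha` reacts faster to changes in the network; a smaller one gives a steadier estimate.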

Conclusion

Adaptive HTTP Streaming enables seamless video delivery using web technologies like MSE while supporting DRM integration through EME standards. The rapid evolution of web technologies continues to empower frontend developers with tools previously limited to plugins like Flash or Silverlight.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.